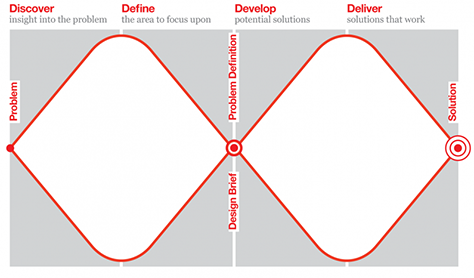

Look at any (of the many, many) design process diagrams out there, and you will see that most have research in common. It might be called ‘discover’ or ‘understand’, but when it boils down to it, understanding the challenge at hand, the context, the user and the scope of the work always requires research to be able to frame the problem appropriately.

UK Design Council’s Double Diamond design process diagram. Image credit: UX Matters

It’s really exciting to see the attention being paid to design research these days, especially as a crucial part of user experience design. Books such as Just Enough Research, podcasts like Dollars to Donuts, and the proliferation of specialized user research roles and even dedicated teams show us the evolution of design research into a modern, vibrant practice.

Designer beware, it’s not all good news! Despite the good traction, there are still pitfalls and common mistakes to look out for in design research. As we kick off 2017, here are three user research resolutions to up our design research game in the upcoming year.

I resolve to… Understand the Difference Between Generative and Evaluative Research

So, you have buy-in for research as part of your user experience design process. The team is pumped! You can’t wait to roll up your sleeves and get going! Time to dive in… right?

Well, almost. First you need to hold your research horses and get a few things straight. Research is not a one-size-fits-all process or set of tools. One of the key mistakes I have seen over and over again is not understanding a basic distinction: the difference between generative and evaluative research.

Generative research is part of the problem framing process. It generates an understanding of the problem space and of your target audiences’ goals, needs, behaviours, attitudes and preferences. This research is often more open ended and is best approached with curiosity. It is often the research that is hardest to get support for, as pre-conceived solutions and scopes are the stuff of many digital projects. This research carries the possibility of finding out that the way people think about something is not quite a fit with how your team or company is thinking about it.

Evaluative research is a way of evaluating a possible solution or prototype. It is a way to test existing concepts by getting them into users’ hands and trying them out to understand whether they are usable, accessible and enjoyable to use. This research is often iterative and should take place several times during a product’s development cycle.

If a client asks for a usability test with one of the goals being to find out if people would use the product, this is often an indicator that generative research was not done upfront. This is a classic example of trying to use an evaluative research technique in a generative way.

I resolve to… Use the Right Research Approach at the Right Time

Ok, so you’ve got the whole generative versus evaluative thing down, and you’re definitely not planning to do a usability test to understand user needs. Great! So how do we pick a research method to get going with?

A common mistake is to lead with the research method – picking one that stakeholders are comfortable with, or one the team has done before. The reality is that different research approaches will be appropriate at different times.

One differentiator is the type of data you will collect. Qualitative data is directly observed information that helps us to understand the why of a problem. Common methods of collecting qualitative data include interviews, field research and diary studies. Quantitative data is often associated with ‘numbers’ – and is often collected through indirect means, such as analytics programs or surveys. Understanding what type of data will best serve your purpose will help you to choose a research method.

This table from Nielsen Norman can help you to think about what research methods to use at what phase of the project.

Much like the design process, at the outset of a project you will want to choose research methods that help you to diverge – gather lots of rich, qualitative information about people, the problem context, and possible framing. As the project progresses, you will work with convergent methods that help to narrow how you will solve the problem, and evaluate the solutions the design team is producing. Once you get to a live product, measuring performance and benchmarking is often done using evaluative, quantitative methods.

The gut check to do when picking a research method is to ask these questions:

- Is this a generative or evaluative method?

- What type of data will it gather (qualitative or quantitative)?

- Where are we in the project lifecycle?
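For readers who like to see a checklist written down as logic, the three questions can be sketched as a small lookup. This is only an illustration, not a definitive tool: the phase names (`outset`, `design`, `live`), the catalogue of methods, and the generative/evaluative label given to each method are assumptions made for the example. The article itself only states that interviews, field research and diary studies gather qualitative data, that usability tests are evaluative, and that live products are usually measured with evaluative, quantitative methods.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Method:
    name: str
    purpose: str    # question 1: "generative" or "evaluative"
    data_type: str  # question 2: "qualitative" or "quantitative"


# Illustrative catalogue. The purpose labels are assumptions for this sketch.
METHODS = [
    Method("interviews", "generative", "qualitative"),
    Method("field research", "generative", "qualitative"),
    Method("diary study", "generative", "qualitative"),
    Method("usability test", "evaluative", "qualitative"),
    Method("analytics review", "evaluative", "quantitative"),
]

# Question 3: hypothetical phase names mapped to the kind of research
# that tends to fit that point in the project lifecycle.
PHASE_NEEDS = {
    "outset": ("generative", "qualitative"),
    "design": ("evaluative", "qualitative"),
    "live": ("evaluative", "quantitative"),
}


def gut_check(phase: str) -> list[str]:
    """Return the catalogued methods that match what the phase calls for."""
    purpose, data_type = PHASE_NEEDS[phase]
    return [
        m.name
        for m in METHODS
        if m.purpose == purpose and m.data_type == data_type
    ]


if __name__ == "__main__":
    for phase in PHASE_NEEDS:
        print(phase, "->", gut_check(phase))
```

Note that a usability test never shows up for the `outset` phase in this sketch, which mirrors the earlier point about not using an evaluative technique to answer a generative question.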

I resolve to… Treat Surveys with the Respect they Deserve

Ah, surveys. Such an efficient, easy way to get the data you need, tick the research box and satisfy stakeholders with easily quantifiable information. Yes?

Beware the survey! Although online tools have made surveys very easy to administer, they come with many risks.


As you’ve probably guessed, there is a bit more to it than that. A key resolution for design research in 2017 is to stop using surveys as an easy out. As Erika Hall writes, “Surveys are the most dangerous research tool — misunderstood and misused. They frequently straddle the qualitative and quantitative, and at their worst represent the worst of both.”

Surveys carry a few risks. For one, they are very difficult to design effectively. Writing effective survey questions is a tricky thing to do – the Pew Research Center points out that “even small wording differences can substantially affect the answers people provide.” Another risk of surveys is that they provide a relatively quick and easy way to gather large data sets, which can lead to ‘statistics’ that feel very compelling but may be misrepresentative. As the famous quote goes, ‘Lies, damned lies, and statistics.’ Due to the low barrier to entry, it is also very easy to do thoughtless surveys, which will not yield useful data that will move your project forward.

Surveys also often fall foul of the most heinous user research crime. Asking people ‘do/would you like…’, ‘will you buy…’, ‘would you recommend to a friend…’ or ‘how likely are you to…’ is a huge mistake. When we are trying to understand human goals and behaviour, asking people to report on a predicted future behaviour or a subjective opinion is not going to yield reliable data. The work of many behavioural economists such as Daniel Kahneman and Dan Ariely looks at our challenges in making good predictions about our own behaviour and the future.

If you really need to do a survey, ensure you take the time to learn best practices on question writing, and proceed with caution. One approach is to pair the survey with another research method, preferably a qualitative one that gets directly observed data and makes room for the rich nuance of human experience.

2017 – The Year of Design Research Self Improvement

A deeper understanding of the distinction between design research approaches and methods will lead to greater success on projects. Whether you are a UX team of one, who has to wear many hats, a specialised UX researcher, or a designer within a team who wants to participate more in the research process, keep the resolutions in mind this year! Make the most of the discovery phase, and enjoy the opportunity to flex your design research muscles.